What morals do intelligent machines have and need?

Sometimes it’s the questions, rather than the answers, that show how the world is changing. Questions about the moral consequences of machines and computers becoming more intelligent are one example. A group of ETH students has tackled this issue.

It's clear that robots are machines and not people. For one thing, they're built to relieve people from purely mechanical or repetitive work. Their intelligence is not natural, but is controlled by computer programs.

However, advances in artificial intelligence, machine learning and self-learning algorithms are expanding the range of applications for machines. In industry and in ETH Zurich’s research laboratories, intelligent robots are now being developed and used for transport, health care and manufacturing, and security, maintenance and service robots are already available on the market. What’s more, there are now less obvious uses for artificial intelligence as well, such as financial trading, social networks and digital advertising.

Various ETH Zurich institutes, laboratories and spin-offs are involved in the development of autonomous robots and are helping to turn Zurich into what the Neue Zürcher Zeitung calls a "robot capital".

How well can a machine make decisions?

Even if intelligent machines cannot out-think people and at the moment mostly take on routine tasks, their advent still raises questions. If man-made machines can "learn" and "decide", what happens when their actions and decisions don’t match human judgements of what is "good"? Who is then responsible for them? Who is liable for any damage they cause?

Self-driving vehicles and war robots raise exactly these moral and legal questions (see the Tages-Anzeiger of 20 March 2017). Who decides whether an intelligent weapons system fires or not? Can a weapons system distinguish between a combat situation and a pause in the fighting, or between enemy soldiers and civilians? How does a self-driving car decide what to do if a child suddenly runs into the road, but swerving would endanger a larger group of people? Fully autonomous weapons and cars do not yet exist, but more pressing questions already arise: who is responsible for mistakes in large organisations or complex networks where both people and machines are at work?

It's always a person, some say. Others argue that we need to establish an additional legal status for “digital persons” in such cases. Is this a case for legislative action, or should measures be based on fiscal considerations? For example, should there be a tax on the added value which robots generate, particularly if people lose their jobs as a result?

Until recently, such questions seemed to be the preserve of science fiction. Lately, however, renowned publications such as Nature, The Economist, Fortune and heise online have also asked how moral machines should be and what ethics are required of artificial intelligence. These questions are also gaining political weight, as shown by parliamentary motions in Switzerland and in Europe; the European resolution concerns legal regulations for robotics as well as a code of ethics for robotics engineers.

Robotics – an issue of ethics

ETH Zurich also has initiatives, notably in education, that reflect these developments: for example, the Critical Thinking Initiative and Cortona Week, or the Master’s programme in the History and Philosophy of Knowledge. Within this environment, ETH students and doctoral candidates from the humanities, engineering and computer science have joined forces to create the Robotics and Philosophy project. In February, they organised a workshop on the ethical implications of intelligent machines and robots and invited experts on the ethics of artificial intelligence, such as cognitive scientist and "robot whisperer" Joanna Bryson, philosopher of technology Peter Asaro and ETH historian of ideas Vanessa Rampton. Lisa Schurrer and Naveen Shamsudhin are members of the project.

Broaden your perspective

Naveen Shamsudhin has just completed his PhD at the Multi-Scale Robotics Lab; his doctoral work was on microrobotic tools for investigating plant growth mechanics. In 2009, he came to ETH Zurich on a federal scholarship (ESKAS) and completed a Master’s in Micro and Nanosystems at the Automatic Control Laboratory.

“Technological development is challenging and as creators, we identify very strongly with our creations,” says Shamsudhin, “but sometimes – alarmingly often, in my opinion – we lose the wider perspective and don’t reflect enough on why and for whom the technology is being developed.” The spread of new technologies through society may solve some of its problems, but it can also cause new ones or lead to new inequalities, he says. Engaging in transdisciplinary projects is therefore very important for creating a broader professional perspective.

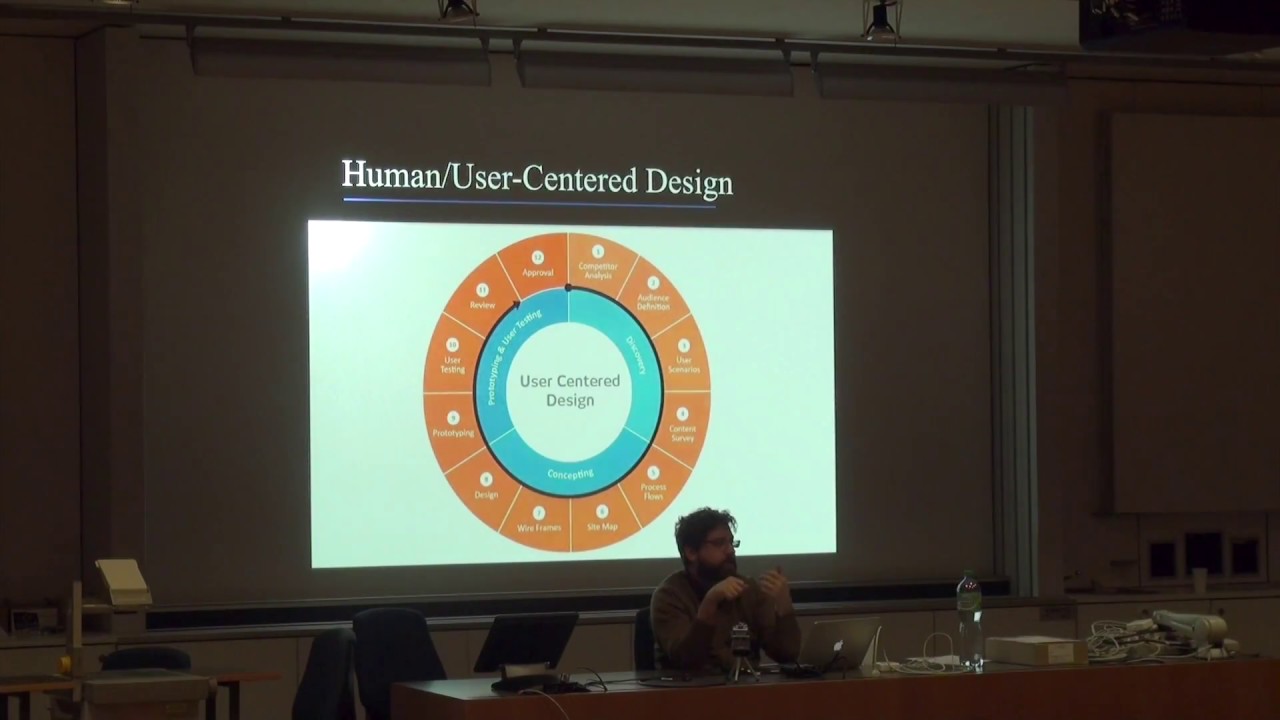

Lisa Schurrer, a Master’s student in the History and Philosophy of Knowledge programme, also values the exchanges with engineers and computer scientists. “For philosophers, conversations with engineers are a reality check about how realistic certain theoretical assumptions are and what can actually be implemented technologically.” She considers it important that ethical reflection begins in the initial design phase rather than only at the end of a development process.

It will not be easy to translate ethical principles into programming languages. Robots are predominantly geared towards mastering routine situations, but ethical behaviour involves more than simply following rules. People have a kind of moral sense of what they should and shouldn’t do in particular situations, even unusual ones. Whether robots and computers will ever develop a similar situational moral instinct is an open and ethically contested question; after all, even “compassionate” robots would collect data that could invade people’s privacy.
